At the International Supercomputing Conference (ISC) 2021, the event for all things high-performance computing (HPC), machine learning (ML) and data analytics, Intel showcased a version of its next-generation Xeon Scalable processor, code-named ‘Sapphire Rapids’, with integrated high-bandwidth memory (HBM).

This news definitely got our HPC team excited.

So what is it about Sapphire Rapids that has them so thrilled? Let’s find out!

Accelerating HPC for the future.

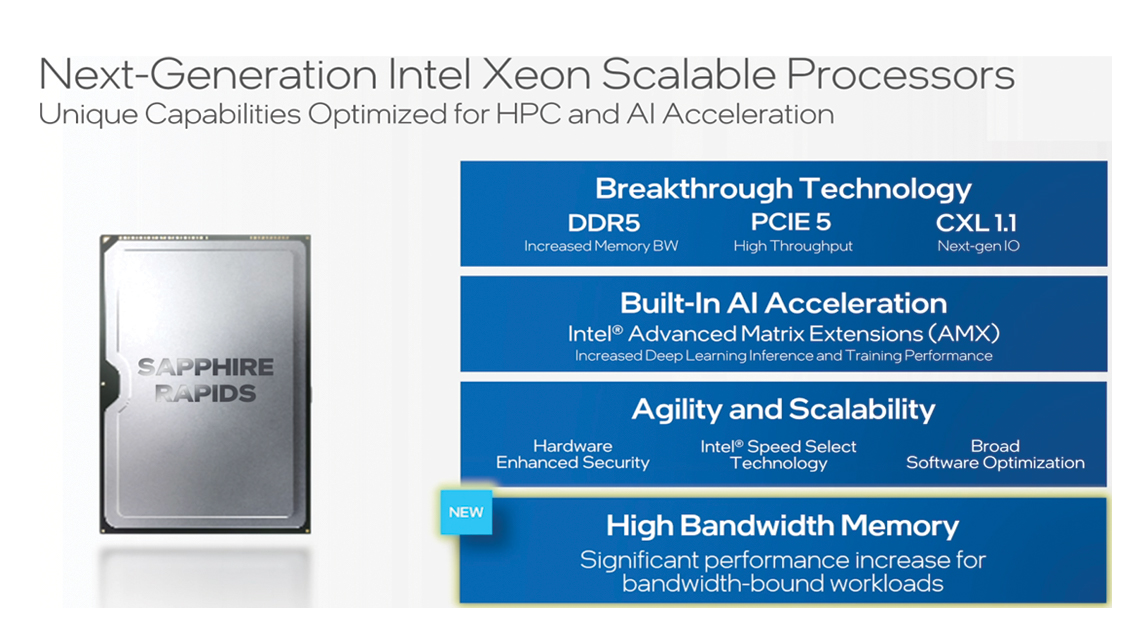

For starters, the new processor, built on Intel’s 10-nanometre Enhanced SuperFin process with a transistor density of 100.8 million transistors per square millimetre, will be jam-packed with industry-shifting technologies set to shake up the world of HPC. Sapphire Rapids will support the latest DDR5 DRAM, offer PCIe 5.0 connectivity and support Compute Express Link (CXL) 1.1 for next-generation device connections. It will also support Crow Pass, the next generation of Intel Optane memory.

Confused by all these acronyms that the IT industry is so fond of? Fret not, we’ve got you covered here and here!

The brand-new microarchitecture is designed to tackle the dynamic and increasingly demanding workloads of future data centres across compute, networking and storage.

Integrating HBM into the Sapphire Rapids processors will also provide a dramatic performance improvement for HPC applications that run memory-bandwidth-sensitive workloads. Users can either rely on HBM (>1.2TB/s) alone, doing away with DDR5 and shrinking the system’s physical footprint, or use it in combination with DDR5 (<400GB/s), where HBM adds an extra tier to the memory hierarchy, for example as a cache in front of DDR5.
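To put those two bandwidth figures in perspective, here is a back-of-the-envelope sketch. It assumes an idealised workload that simply streams a fixed working set from memory once at the peak rates quoted above (treating 1.2TB/s as 1,200GB/s); real applications rarely reach peak bandwidth, and the 100GB working-set size is a hypothetical value chosen purely for illustration, not an Intel figure.

```python
# Idealised, back-of-the-envelope comparison: minimum time to stream a working
# set once at a given peak memory bandwidth. Real workloads achieve less than peak.

HBM_BANDWIDTH_GBPS = 1200.0   # ">1.2TB/s" figure quoted for HBM (decimal units)
DDR5_BANDWIDTH_GBPS = 400.0   # "<400GB/s" figure quoted for DDR5


def stream_time_ms(working_set_gb: float, bandwidth_gbps: float) -> float:
    """Return the minimum time in milliseconds to read working_set_gb once."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return working_set_gb / bandwidth_gbps * 1000.0


if __name__ == "__main__":
    working_set_gb = 100.0  # hypothetical working-set size, for illustration only
    hbm_ms = stream_time_ms(working_set_gb, HBM_BANDWIDTH_GBPS)
    ddr5_ms = stream_time_ms(working_set_gb, DDR5_BANDWIDTH_GBPS)
    print(f"HBM:  {hbm_ms:.1f} ms per pass")
    print(f"DDR5: {ddr5_ms:.1f} ms per pass")
    print(f"Speed-up from bandwidth alone: {ddr5_ms / hbm_ms:.1f}x")
```

Because the HBM figure is a lower bound and the DDR5 figure an upper bound, this simple model suggests a purely bandwidth-bound pass would run at least about three times faster from HBM; compute-bound code would see much less benefit.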

Optimised for HPC and AI workloads.

Pushing the cutting edge further, Intel is also doubling down on its leadership position in artificial intelligence (AI) by incorporating its Advanced Matrix Extensions (AMX).

This addition is expected to accelerate matrix-intensive workloads such as ML, delivering a significant performance increase for deep learning inference and training, where many algorithms run with reduced-precision BFloat16 arithmetic rather than single- or double-precision arithmetic.
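BFloat16 keeps float32’s 8-bit exponent (and therefore its dynamic range) but cuts the stored mantissa from 23 bits to 7, so a bfloat16 value is essentially the upper 16 bits of a float32. The sketch below illustrates only the number format; it does not use AMX. It assumes round-to-nearest-even when dropping the low 16 bits, which is one common conversion choice (hardware and libraries may differ), and it omits NaN handling for brevity.

```python
import struct


def float32_to_bf16_bits(x: float) -> int:
    """Round a float32 value to bfloat16 and return its 16-bit pattern.

    Uses round-to-nearest-even on the 16 dropped mantissa bits.
    NaN inputs are not handled specially in this sketch.
    """
    # Reinterpret the float32 bit pattern as an unsigned 32-bit integer.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    # Adding 0x7FFF plus the lowest kept bit makes exact ties round to even.
    lsb = (bits >> 16) & 1
    rounded = bits + 0x7FFF + lsb
    # Keep the upper 16 bits: 1 sign bit, 8 exponent bits, 7 mantissa bits.
    return (rounded >> 16) & 0xFFFF


def bf16_bits_to_float(b: int) -> float:
    """Expand a 16-bit bfloat16 pattern back to a Python float via float32."""
    (value,) = struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))
    return value


if __name__ == "__main__":
    for x in (1.0, 3.14159265, 1e-30, 65504.0):
        b = float32_to_bf16_bits(x)
        print(f"{x!r:>12} -> 0x{b:04X} -> {bf16_bits_to_float(b)!r}")
```

Running it shows the trade-off in miniature: values keep roughly their magnitude, even very small ones, but lose precision (pi, for instance, comes back as 3.140625), which is often acceptable for deep learning.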

And the treats just keep on coming: Intel is also including its Data Streaming Accelerator (DSA).

This acceleration engine optimises the streaming data movement common in high-performance storage, networking and data-processing applications. It does so by offloading these operations from the general-purpose CPU cores, reducing the overhead the cores would otherwise incur performing them.

Ushering in a new era of scientific innovations.

Customer momentum is already building for Sapphire Rapids processors with integrated HBM.

It will be the brains of the Aurora supercomputer at Argonne National Laboratory and the Crossroads supercomputer at Los Alamos National Laboratory.

Scientists there will perform exciting and data-hungry experiments ranging from mapping the human brain, to solving fusion and fission energy problems, to performing cosmological simulations at exascale to advance our knowledge of the universe.

“Workloads such as modelling and simulation (e.g. computational fluid dynamics, climate and weather forecasting, quantum chromodynamics), artificial intelligence (e.g. deep learning training and inferencing), analytics (e.g. big data analytics), in-memory databases, storage and others power humanity’s scientific breakthroughs,” noted an Intel announcement.

With production slated for the first quarter of 2022, industry excitement for the release is building.

It will be interesting to witness how Sapphire Rapids advances and evolves compute performance, workload acceleration, memory bandwidth and infrastructure management.

The cool new kit will no doubt catalyse the industry’s transition to cloud-based architectures and help bring the data centres of the future closer.