The circulation of blood in our vessels, the flow of a gentle stream around wooden bridge pillars, and the cool breeze whizzing past a car are all examples of commonplace but complex fluid dynamics problems.

Such problems can generally only be solved numerically. And because the structures and models of the modern world are so complex, fluid simulation must be done much more efficiently and precisely to accurately represent what we see and experience.

Traditionally, the Navier-Stokes equations have been used to describe the motion of fluids. However, these equations describe the fluid only on a macroscopic scale. In multiphase or multicomponent systems, such as flows carrying suspended particles, the Navier-Stokes equations alone are not sufficient.

New kid on the block.

Navier-Stokes’ thunder has been stolen by a newcomer: the lattice Boltzmann methods (LBM), a class of computational fluid dynamics methods for fluid simulation. LBM has won the hearts of many computational physicists and engineers thanks to its simplicity, efficiency, stability and numerical accuracy in simulating complicated fluid dynamics on a more detailed, mesoscopic level, between the molecular and the macroscopic. It can even be used to simulate the Schrödinger equation, extending its application to quantum computing.

But LBM calculations are incredibly data-intensive and can be prohibitively expensive to run.

Supercomputing turbocharges fluid mechanics simulations.

With the help of DUG’s high-performance computing as a service (HPCaaS) on the DUG McCloud platform, researcher Dr Robin Trunk from the Karlsruhe Institute of Technology conducted studies to improve the Homogenised Lattice Boltzmann method (HLBM), a specialisation of the LBM. His research team also evaluated the performance and accuracy of HLBM and compared it to other published methods.

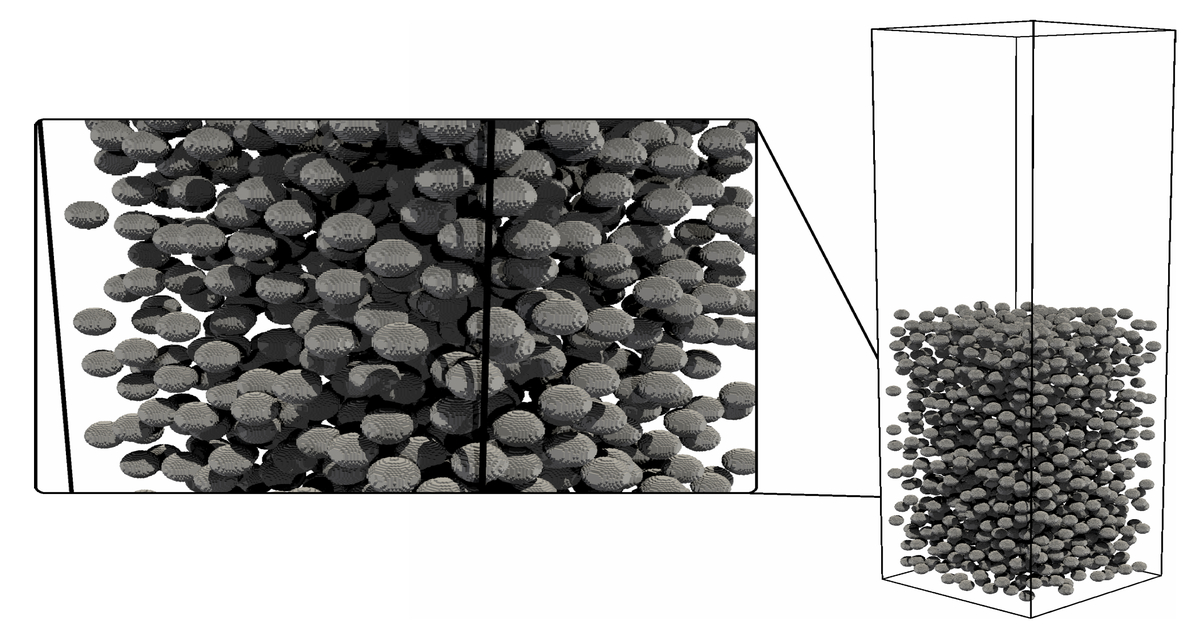

Dr Trunk’s research group also performed a separate study to understand how the shape of particles affects their settling behaviour. Through this work, they identified the relevant parameters describing a particle’s shape, giving them a better understanding of how solid particles settle. Such knowledge is paramount in the design of equipment that involves particle settling, such as laboratory centrifuges, and in the understanding of natural phenomena like the settling of sediments in rivers.

Typically, only simple geometric shapes (think spheres and cubes) are considered when simulating particle-settling behaviour. But Dr Trunk’s research took arbitrarily shaped particles into account, bringing the simulations closer to real-world applications.

LBM is data-parallel by nature: the computational load can be easily distributed, so computations can be run on HPC systems with many computing cores. The researchers ran their simulation codes on DUG’s HPC, using an open-source C++ library developed by their research group, and evaluated the results using their own Python scripts.
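To make the data-parallel point concrete, here is a minimal sketch of one textbook lattice Boltzmann update (the D2Q9 lattice with a single-relaxation-time BGK collision) in plain Python. It is not the researchers' C++ library and it is not HLBM; the function names, the periodic grid, the relaxation time `tau` and the initial state are all assumptions chosen for illustration. What it shows is that the collision step touches only a cell's own data, and the streaming step only reaches nearest neighbours, which is why the work divides so readily across many cores.

```python
# D2Q9 lattice: 9 discrete velocities and their standard weights.
VELOCITIES = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1),
              (1, 1), (-1, 1), (-1, -1), (1, -1)]
WEIGHTS = [4 / 9] + [1 / 9] * 4 + [1 / 36] * 4


def equilibrium(rho, ux, uy):
    """Standard second-order equilibrium populations for one cell."""
    usq = ux * ux + uy * uy
    feq = []
    for (cx, cy), w in zip(VELOCITIES, WEIGHTS):
        cu = cx * ux + cy * uy
        feq.append(w * rho * (1 + 3 * cu + 4.5 * cu * cu - 1.5 * usq))
    return feq


def collide_cell(f, tau):
    """BGK collision: relax one cell towards equilibrium.

    Only this cell's nine populations are read, so no neighbour data is needed.
    """
    rho = sum(f)
    ux = sum(fi * c[0] for fi, c in zip(f, VELOCITIES)) / rho
    uy = sum(fi * c[1] for fi, c in zip(f, VELOCITIES)) / rho
    feq = equilibrium(rho, ux, uy)
    return [fi - (fi - fe) / tau for fi, fe in zip(f, feq)]


def step(grid, tau=0.8):
    """One collide-and-stream update on a periodic grid.

    grid[x][y] is a list of 9 populations; tau=0.8 is an illustrative value.
    """
    nx, ny = len(grid), len(grid[0])
    # Collision: every cell is independent, so this loop could be split
    # across cores or nodes without any communication.
    post = [[collide_cell(grid[x][y], tau) for y in range(ny)]
            for x in range(nx)]
    # Streaming: each population moves to its nearest neighbour only.
    new = [[[0.0] * 9 for _ in range(ny)] for _ in range(nx)]
    for x in range(nx):
        for y in range(ny):
            for i, (cx, cy) in enumerate(VELOCITIES):
                new[(x + cx) % nx][(y + cy) % ny][i] = post[x][y][i]
    return new


def total_mass(grid):
    """Sum of all populations over the whole grid."""
    return sum(sum(cell) for column in grid for cell in column)


def make_grid(nx, ny):
    """Hypothetical initial state: fluid at rest with one denser cell."""
    grid = [[equilibrium(1.0, 0.0, 0.0) for _ in range(ny)]
            for _ in range(nx)]
    grid[nx // 2][ny // 2] = equilibrium(1.2, 0.0, 0.0)
    return grid
```

Calling `step(make_grid(8, 8))` repeatedly spreads the dense spot across the grid while `total_mass` stays constant up to rounding. In a production code the grid would be partitioned into blocks, each core would collide its own block independently, and only the populations crossing block boundaries would need to be exchanged during streaming.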

“It was a great experience doing research on DUG’s [HPCaaS] — it was easy to get started and if questions came up, the support was fast and solution-oriented,” commented Dr Trunk. “Through HPCaaS, it was a pleasure doing research without worrying about system administration or getting the software to work.”

DUG’s HPCaaS offers powerful, world-class compute backed by experts who support the entire HPC journey. We strive to simplify access to our HPC and get you up and running immediately, while our HPC experts stand ready to support you in code optimisation, data management and algorithm development.

All the IT complexities are handled for you, so that you can concentrate on your science, big data analysis, workflow or whatever rocks your socks, floats your boat or puts a smile on your dial!