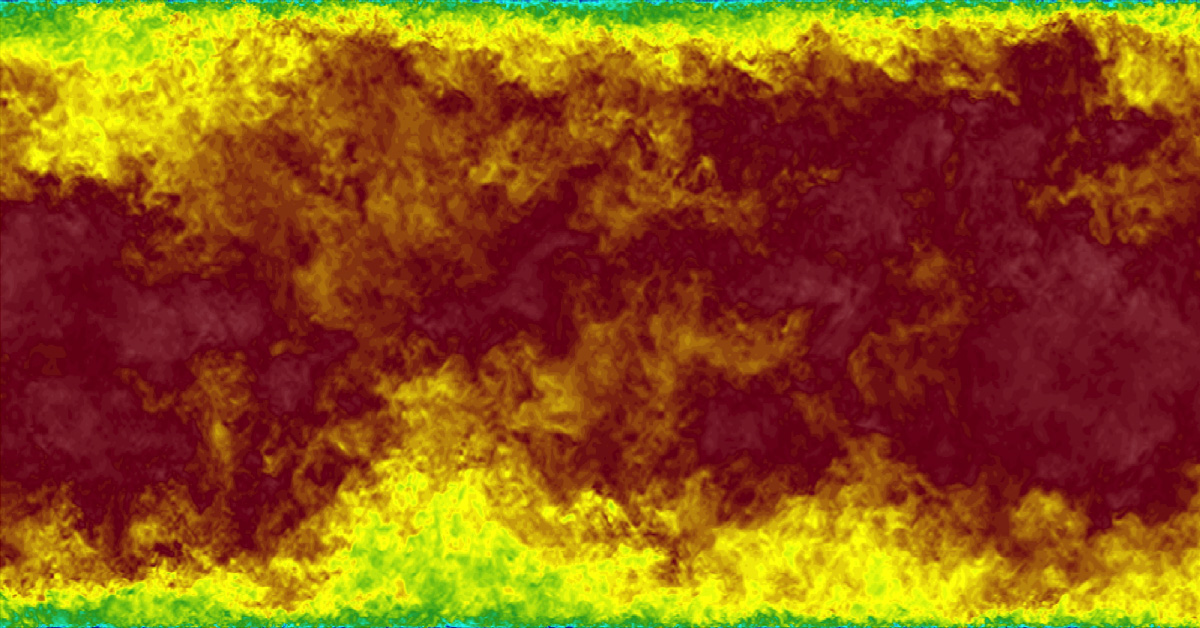

High-resolution computational fluid dynamics (CFD) is a huge consumer of HPC around the world. We recently ran some test simulations for Dr. Reymond Chin and Dr. Byron Guerrero Hinojosa from the University of Adelaide to test DUG McCloud’s capabilities on massive simulations of this kind.

After working with our HPC experts to tune Nek5000 for DUG McCloud’s cores, memory and high-bandwidth, low-latency network, the code ran on between 24,000 and 64,000 cores at up to twice the speed of other platforms. The best bang-for-buck came at 28,000 cores, using over 128 terabytes of RAM!

So, is DUG McCloud up to simulations this size? A resounding “Yes!”

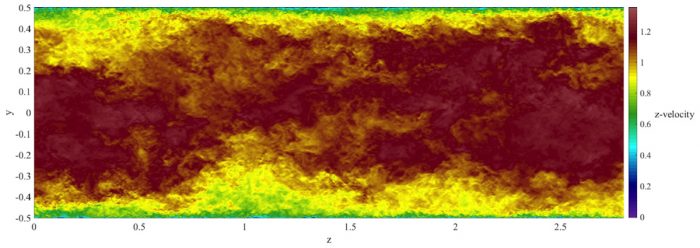

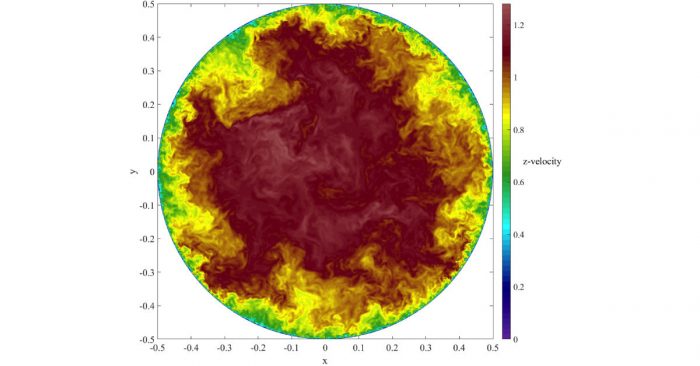

Dr. Byron Guerrero Hinojosa explained the project as follows: “In the real world, many everyday and industrial activities rely heavily on turbulent pipe flows. Water and steam transport, gas and air distribution networks, and crude oil pipelines are only a few examples of everyday applications of turbulent pipe flow.

“Despite its importance in engineered systems, much remains unknown about the physics of turbulent pipe flows, especially the physics that governs turbulence. In that context, this project aims to provide, for the first time, a fully resolved spatio-temporal dataset of turbulent pipe flow at industrial Reynolds numbers.

“The current numerical simulation is conducted for a bulk Reynolds number. The mesh used in this calculation has approximately 20 billion grid points and has been parallelized over 32,000 CPUs on the DUG supercomputer.

“The dataset will lead to technologies that suppress turbulence, resulting in significant energy savings, which translate directly into massive reductions in CO2 emissions.”
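To give a feel for the scale of the run described above, here is a rough back-of-the-envelope sketch of how many grid points each processor handles. It assumes one MPI rank per CPU core and a perfectly even split of the roughly 20 billion grid points; in practice Nek5000 distributes whole spectral elements across ranks, so real per-rank counts will vary somewhat around this average. The function name `points_per_rank` is purely illustrative.

```python
# Back-of-the-envelope load per rank for the ~20 billion point pipe-flow mesh.
# Assumptions (illustrative only): one MPI rank per CPU core, and grid points
# split perfectly evenly. Nek5000 partitions whole spectral elements, so the
# real per-rank count varies slightly around this average.

GRID_POINTS = 20_000_000_000  # "approximately 20 billion grid points"


def points_per_rank(total_points: int, ranks: int) -> float:
    """Average number of grid points held by each rank under an even split."""
    if ranks <= 0:
        raise ValueError("ranks must be positive")
    return total_points / ranks


if __name__ == "__main__":
    # 32,000 is the count quoted for this run; 24,000 and 64,000 bound the
    # range of core counts mentioned, shown here as if the same mesh were used.
    for ranks in (24_000, 32_000, 64_000):
        print(f"{ranks:>6} ranks -> {points_per_rank(GRID_POINTS, ranks):,.0f} points/rank")
```

At the quoted 32,000 CPUs, this works out to an average of 625,000 grid points per rank.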