Recent announcements and the release of the November Top500 list show an increasing number of systems using accelerator technology (e.g., NVIDIA V100). However, the number 1 machine, Fugaku, doesn’t.

As Prof. Satoshi Matsuoka tweeted “Indeed, had we went for 1024bit SVE and slightly larger machine (as such a little larger budget) we will have hit Linpack Rmax Exaflop; but the design objective was to excel in the apps, and our design analysis indicated that 512 bit was the sweet spot.”

In other words, they could have designed a machine with twice the FLOPS and chose not to.
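The "twice the FLOPS" claim follows from how theoretical peak scales with vector width: at a fixed core count and clock, doubling the SVE width doubles the number of lanes per instruction. A minimal sketch of that arithmetic is below. The core count, clock speed, and number of FMA pipelines are assumed A64FX-like values that do not come from this article, and `peak_tflops` is a hypothetical helper.

```python
def peak_tflops(cores, ghz, fma_pipes, vector_bits, element_bits=64):
    """Theoretical double-precision peak for a SIMD CPU, in TFlops."""
    lanes = vector_bits // element_bits       # doubles per vector register
    flops_per_cycle = fma_pipes * lanes * 2   # a fused multiply-add counts as 2 flops
    return cores * ghz * flops_per_cycle / 1000.0

# Assumed values (not from the article): 48 cores, 2.2 GHz, 2 FMA pipes per core.
sve_512 = peak_tflops(cores=48, ghz=2.2, fma_pipes=2, vector_bits=512)
sve_1024 = peak_tflops(cores=48, ghz=2.2, fma_pipes=2, vector_bits=1024)

print(f"512-bit SVE : {sve_512:.2f} TFlops per chip")
print(f"1024-bit SVE: {sve_1024:.2f} TFlops per chip")
print(f"ratio       : {sve_1024 / sve_512:.1f}x")
```

Under these assumptions the wider vectors double the peak figure, but nothing in the formula says the memory system could keep those extra lanes fed.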

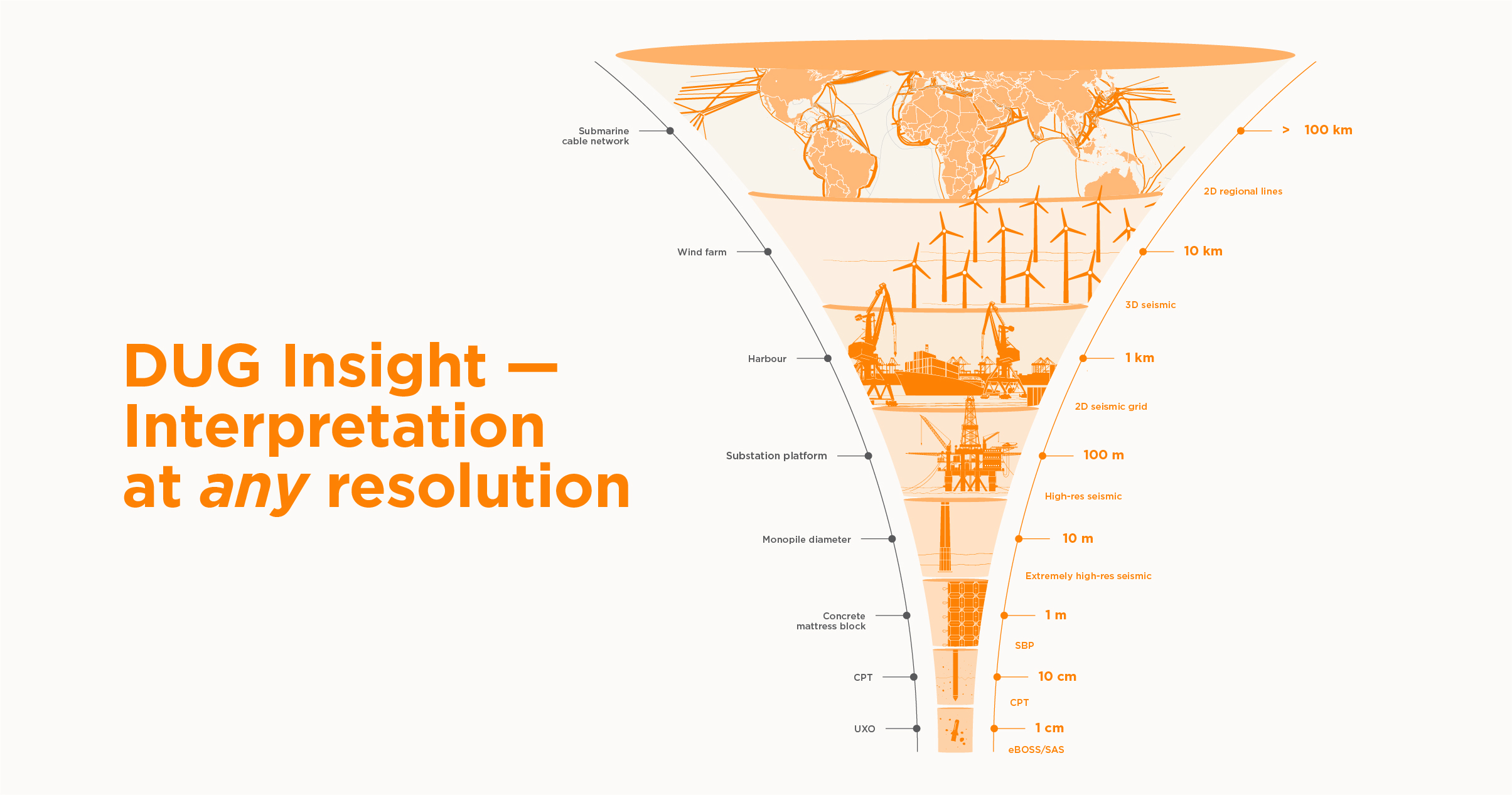

To understand why, consider the recent GPU technologies from NVIDIA, AMD and Intel. Over the next couple of years all will have compute engines delivering 40TFlops per device, with many devices per server. These will employ HBM (high bandwidth memory) and deliver TB/s of bandwidth. However, memory bandwidth isn’t keeping up with the FLOPS, and all these devices sit on buses (commonly PCIe) whose bandwidth hasn’t improved significantly for years.

The FLOPS count is climbing dramatically but memory, bus, and network bandwidths are not accelerating anywhere near as quickly.

The current x86 champion for memory bandwidth is the four-year-old Intel Xeon Phi Knights Landing (KNL). This device delivers just over 3TFlops of compute and has around 450GB/s of memory bandwidth (150GB/s/TFlop).

The current NVIDIA GPUs deliver over 7.8TFlops of performance with around 900GB/s of memory bandwidth (115GB/s/TFlop).

The just-announced NVIDIA A100 delivers 9.7TFlops of performance with 1555GB/s of memory bandwidth (160GB/s/TFlop).

The recently-launched AMD GPU delivers 11.5TFlops of performance with 1200GB/s of memory bandwidth (104GB/s/TFlop).

The next-generation Intel Xe GPU will deliver around 40TFlops of compute and looks to have around 4000GB/s of memory bandwidth (100GB/s/TFlop).

Fugaku A64FX has around 3.3TFlops of performance with around 1000GB/s of memory bandwidth (300GB/s/TFlop).
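The per-device ratios above are simply memory bandwidth divided by peak compute. As a rough sketch, the comparison can be reproduced with the figures quoted in the text; the device labels and the helper name `gb_per_tflop` are illustrative, and the inputs are the approximate numbers given here rather than vendor-verified specifications.

```python
# (label, peak TFlops, memory bandwidth in GB/s), as quoted in the text
DEVICES = [
    ("Intel Xeon Phi KNL", 3.0, 450),
    ("NVIDIA (current)", 7.8, 900),
    ("NVIDIA A100", 9.7, 1555),
    ("AMD (recently launched)", 11.5, 1200),
    ("Intel Xe (next generation)", 40.0, 4000),
    ("Fugaku A64FX", 3.3, 1000),
]

def gb_per_tflop(tflops, gb_per_s):
    """Memory bandwidth available per TFlop of peak compute."""
    return gb_per_s / tflops

ratios = {name: gb_per_tflop(tf, bw) for name, tf, bw in DEVICES}

# List the devices from most balanced to least balanced.
for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<28} {ratio:6.0f} GB/s per TFlop")
```

Sorted this way, the A64FX sits well clear of every GPU in the list, at roughly twice the ratio of the next best device.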

The bandwidth constraints of the GPU-based systems are compounded by the interconnect (typically PCIe) used between the GPU, CPU and other devices. Many systems are stuck on PCIe v3 with some new systems transitioning to PCIe v4, which has double the bandwidth. PCIe v5 won’t be available for many years yet. To address this challenge, some vendors are developing their own connecting buses such as SXM2, NVLink and Infinity Fabric.

Most of the supercomputers on the Top500 are designed to function as a single compute engine. In other words, a single application runs across thousands of servers concurrently. The network connecting the compute servers is critical to the performance of the application. The current crop of networks is delivering around 100-200Gb/s of bandwidth per node, but node performance is skyrocketing. Summit (number 2 on the Top500 list) is running 6 GPUs per node with only 200Gb/s of network bandwidth.

Again, Fugaku chose an entirely different route.

Fugaku: 3TFlops/node with up to 400Gb/s gives 133Gb/s/TFlop of network bandwidth.

Summit: 49TFlops/node with up to 200Gb/s gives 4Gb/s/TFlop of network bandwidth.
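The same balance calculation applies at the node level: network bandwidth divided by node compute. The sketch below uses only the per-node figures quoted above; `gbit_per_tflop` is a hypothetical helper, and the "up to" link speeds are treated as achievable, which is an optimistic assumption.

```python
# system -> (peak TFlops per node, network bandwidth per node in Gb/s)
NODES = {
    "Fugaku": (3.0, 400.0),
    "Summit": (49.0, 200.0),
}

def gbit_per_tflop(node_tflops, link_gbit_s):
    """Network bandwidth available per TFlop of node compute."""
    return link_gbit_s / node_tflops

balance = {name: gbit_per_tflop(tf, bw) for name, (tf, bw) in NODES.items()}

for name, value in balance.items():
    print(f"{name}: {value:.0f} Gb/s per TFlop")

# How much more network bandwidth each TFlop gets on Fugaku than on Summit.
advantage = balance["Fugaku"] / balance["Summit"]
print(f"Fugaku advantage: about {advantage:.0f}x")
```

On these numbers each TFlop on Fugaku has more than thirty times the network bandwidth of a TFlop on Summit.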

Fugaku’s unique approach, building a more balanced system, allows researchers to more easily access the huge number of FLOPS available. This is evident from the high ratio of Rmax to Rpeak, which measures the percentage of theoretical peak performance that the Linpack benchmark actually achieved:

Fugaku 82%

Summit 74%
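These percentages are Rmax divided by Rpeak. A small sketch of the calculation follows. The PFlop/s values are assumed figures for the November 2020 Top500 list and do not appear in this article, so they should be checked against the published list; `linpack_efficiency` is a hypothetical helper.

```python
def linpack_efficiency(rmax, rpeak):
    """Fraction of theoretical peak achieved on Linpack (same units for both)."""
    return rmax / rpeak

# Assumed Rmax and Rpeak in PFlop/s (not taken from the article).
SYSTEMS = {
    "Fugaku": (442.01, 537.21),
    "Summit": (148.60, 200.79),
}

efficiency = {
    name: linpack_efficiency(rmax, rpeak)
    for name, (rmax, rpeak) in SYSTEMS.items()
}

for name, value in efficiency.items():
    print(f"{name}: {value:.0%} of peak")
```

Linpack is compute-bound, so it flatters every machine; the gap would be expected to widen on bandwidth-bound applications, which is the argument being made here.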

Fugaku is a truly remarkable system and, like its predecessors (Earth Simulator, K Computer), is destined to be number one for an extended period of time and deliver exceptional performance to the Japanese research community.