Though you might not be able to kick back and relax in a completely autonomous vehicle today, automakers all over the world are racing to place one on the market.

The Technoking of Tesla might be the most eager to do so.

Elon Musk has recently supercharged Tesla’s self-driving artificial intelligence (AI) technology with a powerful new supercomputer, which he expects will drive his Teslas to the finish line at full throttle (without human supervision).

New supercomputer with mad specs.

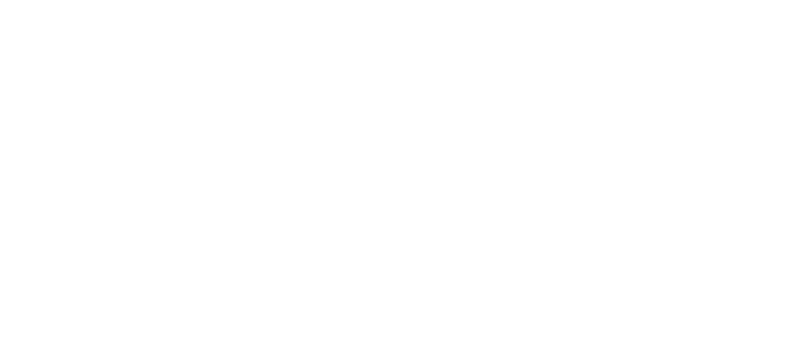

At the Conference on Computer Vision and Pattern Recognition (CVPR) 2021, Andrej Karpathy, Tesla’s Head of AI, unveiled the company’s third supercomputer cluster.

Tesla claimed some fairly mad specifications for this brand-new supercomputer:

- 720 nodes of 8x NVIDIA A100 80GB – a total of 5760 GPUs

- 1.8 exaFLOPS (720 nodes × 8 GPUs per node × 312 TFLOPS FP16 per A100)

- 10 petabytes of “hot tier” NVMe storage @ 1.6 terabytes per second

- 640 terabytes per second of total switching capacity
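The headline compute figure is simply per-GPU peak throughput multiplied across the whole cluster. The short Python sketch below reproduces that arithmetic, assuming the 312 TFLOPS dense FP16 Tensor Core peak that NVIDIA quotes for the A100. It is a theoretical peak, not a measured benchmark result.

```python
# Reproduce the cluster's headline peak-compute figure from its per-GPU spec.
NODES = 720           # nodes in the cluster
GPUS_PER_NODE = 8     # NVIDIA A100 80GB GPUs per node
TFLOPS_PER_GPU = 312  # assumed A100 dense FP16 Tensor Core peak, in TFLOPS


def cluster_peak_exaflops(nodes: int, gpus_per_node: int, tflops_per_gpu: float) -> float:
    """Return theoretical peak throughput in exaFLOPS (1 exaFLOPS = 1,000,000 TFLOPS)."""
    total_tflops = nodes * gpus_per_node * tflops_per_gpu
    return total_tflops / 1_000_000


total_gpus = NODES * GPUS_PER_NODE
peak = cluster_peak_exaflops(NODES, GPUS_PER_NODE, TFLOPS_PER_GPU)
print(f"{total_gpus} GPUs, {peak:.2f} exaFLOPS peak FP16")
```

The exact product is about 1.797 exaFLOPS, which rounds to the 1.8 exaFLOPS Tesla quoted.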

HPCwire estimated that these specs should catapult the supercomputer to fifth place among the world’s most powerful computers, but Karpathy stated that his team has yet to run the LINPACK benchmark required to place it in the TOP500 supercomputer rankings.

His full CVPR 2021 presentation is available to watch online.

Foreshadowing Dojo.

This new supercomputer appears to be a precursor to Tesla’s upcoming Dojo supercomputer, which is slated for release by the end of this year.

Since 2019, the self-proclaimed “Imperator of Mars” has been teasing a supercomputer, developed under the Dojo program, to train the neural networks at the heart of Tesla’s machine learning architecture.

He has also hinted that Dojo will be the world’s fastest supercomputer, capable of an exaFLOP (a quintillion floating-point operations per second), besting Japan’s Fugaku, which currently tops the TOP500 list at 442 petaFLOPS.
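For a rough sense of scale, the sketch below compares a one-exaFLOP machine with Fugaku’s 442 petaFLOPS. Treat the ratio as illustrative only: TOP500 rankings are based on measured FP64 LINPACK results, while headline AI figures such as the 1.8 exaFLOPS above are theoretical FP16 peaks, so this is not a like-for-like comparison.

```python
# Rough scale comparison between "an exaFLOP" and Fugaku's TOP500 result.
# Illustrative only: the two numbers may not use the same precision or benchmark.
PETAFLOPS_PER_EXAFLOP = 1_000

dojo_target_pflops = 1 * PETAFLOPS_PER_EXAFLOP  # "an exaFLOP", expressed in petaFLOPS
fugaku_pflops = 442                              # Fugaku's TOP500 figure cited in the article

ratio = dojo_target_pflops / fugaku_pflops
print(f"1 exaFLOP is about {ratio:.2f}x Fugaku's 442 petaFLOPS")
```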

It sounds very powerful. But will it look as cool as the Cray-1? Stay tuned for its release!

The Cray-1 supercomputer with its “couch” design. Photo credit: Ellinor Algin/Swedish National Museum of Science and Technology

Training Autopilot.

Tesla’s Memelord needs all this computing juice to power both the interior and exterior of his fleet of self-driving cars.

On the inside, Tesla needs powerful computers to run its autonomous driving software. On the outside, it requires massive supercomputers to train its self-driving software.

Eight surround cameras, each running at 36 frames per second, are mounted on each Tesla vehicle to provide 360 degrees of visibility around the car at up to 250 metres of range. The vehicle’s advanced driver assistance system, imaginatively called Autopilot, feeds this camera data to the computer built into the car, which interprets the scene and makes driving predictions in real time. Data gathered from Tesla vehicles across the fleet is then used to improve Autopilot’s driving performance.
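Those camera figures add up to a substantial stream of images for a single car. A back-of-the-envelope calculation in Python, using only the eight cameras and 36 frames per second quoted above (it ignores resolution and any frames the car might skip or downsample):

```python
# Back-of-the-envelope image throughput for one car, from the article's figures.
CAMERAS = 8
FPS_PER_CAMERA = 36
SECONDS_PER_HOUR = 3_600

frames_per_second = CAMERAS * FPS_PER_CAMERA
frames_per_hour = frames_per_second * SECONDS_PER_HOUR
print(f"{frames_per_second} frames/s, {frames_per_hour:,} frames per hour of driving")
```

That is roughly a million frames per car for every hour on the road, before multiplying by the size of the fleet.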

At the same time, this mountain of fleet data is used to check whether the neural network’s driving decisions were accurate or whether it misinterpreted anything. Where the network did misidentify something, those examples are run back through the supercomputers to retrain it and tweak its behaviour, continuously improving the Autopilot AI model.

“We have a neural net architecture network and we have a data set, a 1.5 petabytes data set that requires a huge amount of computing. So I wanted to give a plug to this insane supercomputer that we are building and using now. For us, computer vision is the bread and butter of what we do and what enables Autopilot. And for that to work really well, we need to master the data from the fleet, and train massive neural nets and experiment a lot. So we invested a lot into the compute”, commented Karpathy during the conference.

Still a bumpy road ahead.

Speaking of computer vision, not everyone is confident in Tesla’s decision to go all-in on vision-only autonomous driving. I mean, what happens in fog? Or when faced with a clever trap for self-driving cars?

Even the Technoking himself has come to the realisation that self-driving cars are hard to develop!

Carmakers working on autonomous technology typically combine LiDAR, radar and cameras in a sensor fusion system, so that each sensor can compensate for the others’ weaknesses and keep their vehicles as safe as possible on the road. Volvo’s next-gen XC90 SUV is one example.

While perfecting vision-based autonomy with cameras alone is difficult, Tesla believes it is the key to affordable and reliable self-driving cars.

With Tesla’s new supercomputers giving a huge boost to its self-driving software, it will be interesting to see whether Tesla’s vision-based technology prevails over its competitors or… runs out of gas.