The term ‘big data’ is often used in reference to high-performance computing but is, more often than not, misunderstood. Firstly, and perhaps surprisingly, it doesn’t necessarily mean there is a lot of data. And even if you have an awful lot of data, that alone doesn’t make it ‘BIG’ data.

If you type “Big data meaning” into your favourite search engine, you might end up with an explanation like this: “Extremely large data sets that may be analysed computationally to reveal patterns, trends, and associations, especially relating to human behaviour and interactions.”

The key to this definition is the phrase “reveal patterns, trends, and associations”.

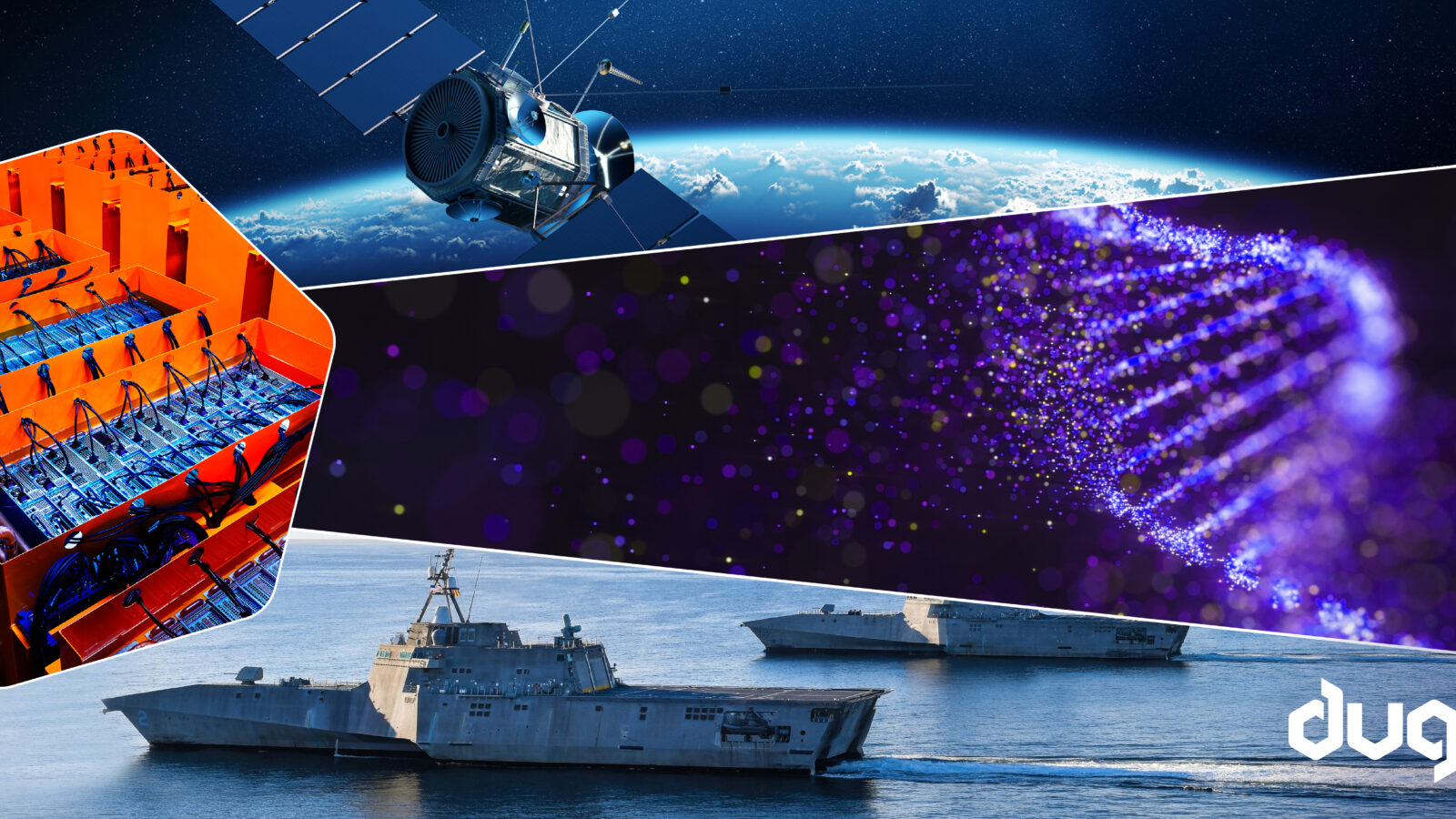

Many industries generate vast quantities of data. Seismic processing and astrophysics, for example, generate and process millions of gigabytes of data annually. (Just take a look at the amount of data they expect to generate from the SKA telescopes – mind-blowing!) But they wouldn’t traditionally be called ‘big data’, because they aren’t looking for patterns, trends and associations. They are generating images or models of the physical world.

On the other hand, genome sequencing and the processing to reassemble the genes would be considered ‘big data’, even though each individual process might only involve a few gigabytes of data. Reassembly looks for common and overlapping patterns in thousands of small files and aligns them to generate one continuous sequence.
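To make the idea of “looking for overlapping patterns” a little more concrete, here is a toy sketch in Python. It is not how production genome assemblers work (they use far more sophisticated methods and have to cope with sequencing errors); it simply merges short fragments greedily wherever the end of one matches the start of another. The function names `overlap` and `assemble`, the `min_len` threshold, and the example fragments are all made up for illustration.

```python
def overlap(a: str, b: str, min_len: int = 3) -> int:
    """Length of the longest suffix of `a` that is also a prefix of `b`.

    Returns 0 if the best overlap is shorter than `min_len`.
    """
    for n in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:n]):
            return n
    return 0


def assemble(fragments, min_len: int = 3):
    """Greedily merge the pair of fragments with the largest overlap.

    Repeats until no pair overlaps by at least `min_len` characters.
    Toy illustration only: assumes error-free fragments.
    """
    reads = list(fragments)
    while len(reads) > 1:
        best_n, best_i, best_j = 0, None, None
        for i, a in enumerate(reads):
            for j, b in enumerate(reads):
                if i != j:
                    n = overlap(a, b, min_len)
                    if n > best_n:
                        best_n, best_i, best_j = n, i, j
        if best_n == 0:
            break  # nothing left that overlaps
        merged = reads[best_i] + reads[best_j][best_n:]
        reads = [r for k, r in enumerate(reads) if k not in (best_i, best_j)]
        reads.append(merged)
    return reads


# Hypothetical fragments, deliberately out of order
fragments = ["ATTAGACCTG", "CCTGCCGGAA", "AGACCTGCCG", "GCCGGAATAC"]
print(assemble(fragments))
```

Even in this tiny example, the work is all pattern matching between fragments rather than crunching a large volume of data, which is the point being made here.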

Searching a large database of, say, financial records for patterns that indicate fraud is considered ‘big data’, whereas pulling model parameters from a database to feed into a large, complex fluid-flow model, which will generate thousands of gigabytes of output, is not.

BUT processing millions of gigabytes of surveillance camera images looking for a person is ‘big data’.

‘BIG data’ is more about how you use the data than how large the data set is.

In the open-source software industry they say “free as in speech”; similarly, it is “BIG data as in BIG Brother”.