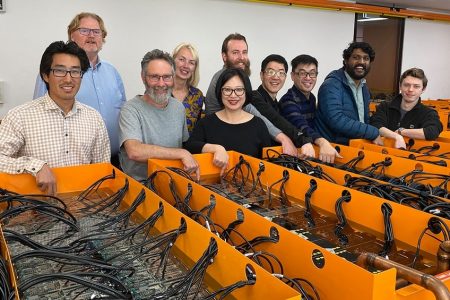

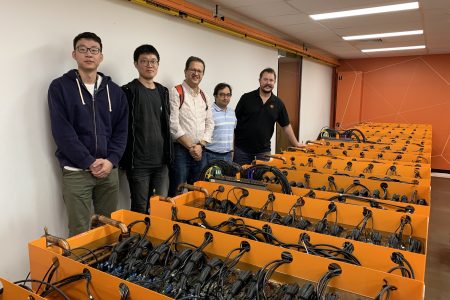

Recently, DUG hosted the Perth Linux User Group (PLUG) for a meetup.

We are a huge user of Linux with thousands of servers and desktops running the OS to deliver our DUG McCloud high-performance compute as-a-service (HPCaaS) offerings.

PLUG’s members were keen to learn more about the challenges of running high-performance computing at extreme scale, and how they differ from running your favourite distro on your home server or desktop.

First, you need highly skilled administrators and support staff, with an eye for detail and strong problem-solving skills.

Because the servers are almost identical, a small problem on one system may point to the same problem on thousands of others.

To combat this, nothing can be performed by hand. You need fully automated, distributed systems.

A sophisticated monitoring system is absolutely necessary – you cannot rely on your administrators discovering problems by chance.

The monitoring system also needs to be capable of fixing many problems itself – reducing the need for human intervention.
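As a rough illustration of that idea, here is a minimal Python sketch, not DUG's actual tooling. It assumes each server can be probed for a single value (a kernel version in this example), treats the value reported by most of the fleet as the healthy state, attempts an automated fix on any server that deviates, and only escalates to a human when the fix does not work. The names `majority_value`, `find_outliers`, `check_and_heal`, `probe` and `fix` are all hypothetical and exist only for this example.

```python
from collections import Counter
from typing import Callable, Dict, List, Optional, Tuple


def majority_value(observations: Dict[str, str]) -> str:
    """Return the value reported by most hosts (assumed to be the healthy state).

    On a tie, Counter.most_common returns the value seen first.
    """
    return Counter(observations.values()).most_common(1)[0][0]


def find_outliers(observations: Dict[str, str]) -> List[str]:
    """Return the hosts whose observed value differs from the fleet majority."""
    if not observations:
        return []
    expected = majority_value(observations)
    return sorted(host for host, value in observations.items() if value != expected)


def check_and_heal(
    hosts: List[str],
    probe: Callable[[str], str],
    fix: Optional[Callable[[str], None]] = None,
) -> Tuple[List[str], List[str]]:
    """Probe every host, try to fix the outliers, and report what happened.

    Returns (healed, escalated): hosts repaired automatically, and hosts
    that still deviate and need a human to look at them.
    """
    observations = {host: probe(host) for host in hosts}
    if not observations:
        return [], []
    expected = majority_value(observations)

    healed: List[str] = []
    escalated: List[str] = []
    for host in find_outliers(observations):
        if fix is not None:
            fix(host)
        # Re-probe after the fix attempt: only trust a repair we can observe.
        if fix is not None and probe(host) == expected:
            healed.append(host)
        else:
            escalated.append(host)
    return healed, escalated


if __name__ == "__main__":
    # Simulated fleet state; a real probe would query the servers themselves.
    fleet = {
        "node01": "5.15.0",
        "node02": "5.15.0",
        "node03": "5.10.0",
        "node04": "5.15.0",
        "node05": "4.19.0",
    }

    def simulated_fix(host: str) -> None:
        # Pretend the automated fix only succeeds on node03.
        if host == "node03":
            fleet[host] = "5.15.0"

    healed, escalated = check_and_heal(list(fleet), fleet.__getitem__, simulated_fix)
    print("healed:", healed)
    print("escalated:", escalated)
```

Comparing each server against the fleet majority, rather than against a hard-coded expected value, leans on the fact that the servers are nearly identical: whatever most of them report is a reasonable stand-in for "normal".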

Layered on these administrative systems are a sophisticated, high-bandwidth, super-low-latency network and a massively distributed storage system, capable of handling the petabytes of data thrown at it.

To achieve such high bandwidths and low latencies, you need to employ technologies such as kernel bypass or RDMA (Remote Direct Memory Access) – where a user process on one server can inject data directly into a user process running on another server, without the usual safety checks and management provided by the Linux kernel.

As with Formula One race cars, the ‘HP’ in ‘HPC’ drives technology, innovation, and new ideas.

At DUG, it’s our novel network, in-house-developed systems, and patented cooling system that put us at the forefront of HPC delivery.