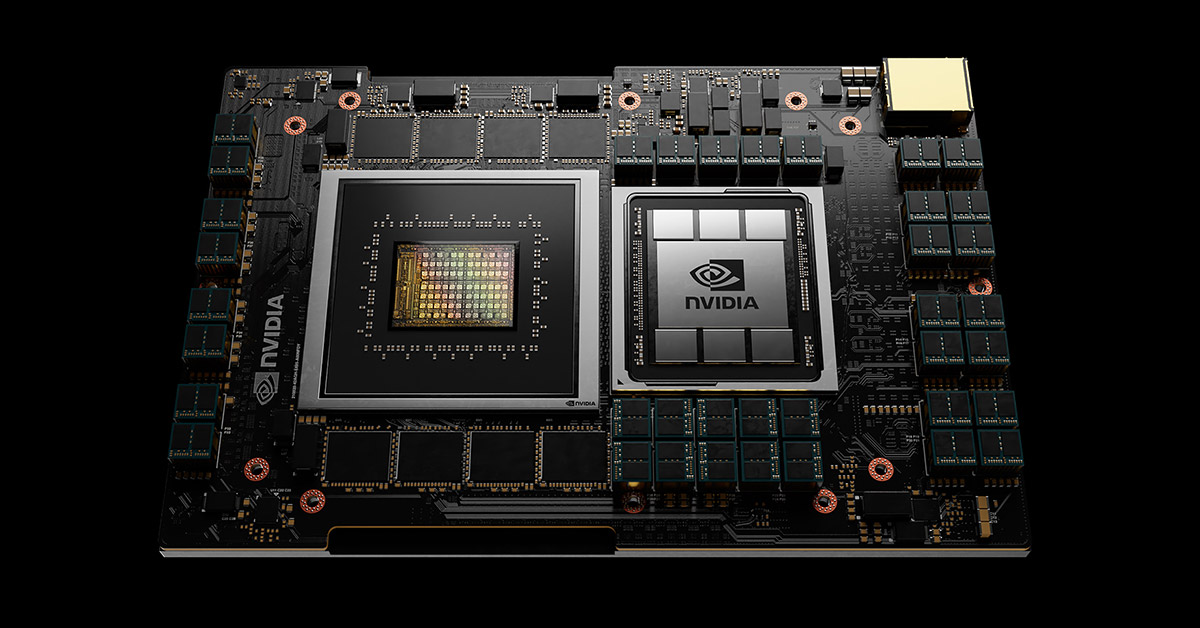

NVIDIA made a slew of announcements at its GTC (GPU Technology Conference), most notably that it is entering the CPU business with an Arm-based CPU (called Grace in honor of the famous American programmer Grace Hopper), and that it has a brand spanking new DPU.

Entry into the CPU business is going to ruffle feathers. Arm has been a sleeping giant in the general CPU and HPC space. Arm owns nearly the entire phone/tablet space and is installed on nearly every single PC motherboard as the BMC/BIOS brains. Arm is basically everywhere, used by everyone… except as the main CPU and certainly not in the HPC space… until recently. The world’s number one HPC system, Fugaku, is based on a Fujitsu Arm CPU called A64FX, and now, with its acquisition of Arm still incomplete, NVIDIA is building an Arm-based CPU. This will put the cat amongst the pigeons! It will be a disruptive move that will be felt in the IT sector for a long time.

But what is the other piece of hardware NVIDIA announced? The DPU? And how does it relate to a CPU, GPU, TPU, NPU, VPU, IPU, QPU, DSP, FPGA and other such devices? Why do computer companies like acronyms of acronyms? And why is my computer so slow these days?

I discussed these briefly in my HPC Hour talk a few weeks ago.

But before I go on – here’s a quick refresher on some of HPC’s favourite acronyms.

CPU – central processing unit – the brains of most computers. The general purpose computing engine that runs your Excel spreadsheet, powers your web browser and acts as the go-between for storage, memory, network, GPU and your files.

GPU – graphics processing unit – the device that handles all the processing for displaying graphics on your screen. These have slowly become general compute workhorses for matrix and vector computations.

TPU – Tensor processing unit – a special device for doing low-precision tensor arithmetic (e.g. 256 x 256 matrix-vector multiplication). Really useful for AI/ML.

NPU – Neural network processing unit – a special device tuned for low-precision neural network calculations.

VPU – Vision processing unit – a special device to assist AI processing for vision-based algorithms (might be directly attached to cameras).

IPU – Intelligence processing unit – a specialised device to do massively parallel AI calculations.

QPU – Quantum processing unit – a completely new and novel processing engine that promises to deliver an enormous leap in computing power. They function completely differently to current computing hardware… and you can’t actually buy one yet.

DSP – Digital signal processor – a microprocessor designed to process digital signals as a continuous stream, e.g. sound or video.

FPGA – Field programmable gate array – a device which allows you to effectively design your own computing device. You get an array of very basic processing elements that you can virtually wire together to form a static algorithm.

DPU – Data processing unit – a general programmable device for processing streaming data like network traffic (like a DSP but more general).
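To make "streaming" a bit more concrete, here is a minimal Python sketch of the kind of job a DSP or DPU is built for: samples arrive one at a time, and each one is processed as it arrives using only a small window of recent history. The `moving_average` function and the sample values are made up for illustration; real devices do this sort of thing in dedicated hardware, not in Python.

```python
from collections import deque
from typing import Iterable, Iterator


def moving_average(samples: Iterable[float], window: int) -> Iterator[float]:
    """Yield the running mean of the most recent `window` samples."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent = deque(maxlen=window)  # only the latest samples are ever kept
    total = 0.0
    for sample in samples:
        if len(recent) == window:
            total -= recent[0]  # the oldest sample is about to drop out
        recent.append(sample)
        total += sample
        yield total / len(recent)


# Hypothetical input stream; in practice this could be audio samples or packet sizes.
smoothed = list(moving_average([1.0, 2.0, 3.0, 4.0, 5.0], window=3))
print(smoothed)
```

The point is that the whole stream never has to sit in memory: each output depends only on the last few inputs, which is what makes this style of work a good fit for small, specialised hardware.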

As a general rule of thumb, the *PU devices are like a CPU but with a heap of unnecessary features removed and useful features beefed up – depending on their target. They tend to be more general than the DSP and FPGA type devices. For example, there is no point in building high-precision mathematics (the kind needed for atmosphere modelling) into your device if your workload doesn’t need it (as with AI/ML workloads).
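As a rough illustration of that precision trade-off, the Python sketch below does the same small matrix-vector multiplication twice: once in ordinary floating point, and once with every value squeezed into an 8-bit integer first. The sizes, the random data and the simple shared-scale quantisation scheme are all assumptions chosen for illustration; this is not how any particular TPU or NPU actually works.

```python
import random


def quantize(values, scale):
    """Map floats to signed 8-bit integers in [-127, 127] using a shared scale."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def matvec(matrix, vector):
    """Plain matrix-vector product: one dot product per row."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


random.seed(0)
n = 8  # tiny compared with real hardware, just to keep the example quick
matrix = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
vector = [random.uniform(-1, 1) for _ in range(n)]

# Reference answer in full floating point.
exact = matvec(matrix, vector)

# Low-precision answer: integer multiply-accumulate, rescaled at the end.
scale = 1 / 127
q_matrix = [quantize(row, scale) for row in matrix]
q_vector = quantize(vector, scale)
approx = [acc * scale * scale for acc in matvec(q_matrix, q_vector)]

max_error = max(abs(e - a) for e, a in zip(exact, approx))
print(f"largest absolute error: {max_error:.4f}")
```

The low-precision result is slightly off, but only slightly. An AI/ML workload can usually live with that kind of error in exchange for much cheaper arithmetic, whereas a long-running physical simulation generally cannot.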

The IT industry loves acronyms of acronyms because typing “graphics processing unit technology conference” takes a lot of letters and screen real estate.

Your computer is slow because, under the covers, it is doing an incredibly complicated set of tasks and processing a vast amount of data whilst using an incredibly small amount of energy – all without the hardware vendor knowing what you want to do. So they tend to allocate resources in a general way that is sub-optimal for you. By introducing the vast array of different processing elements above, they can start to deliver more specific resources to solve your task as quickly as possible.

Tuning the hardware to your needs can have a dramatic impact on what you can achieve. A TPU will run your AI/ML workloads incredibly fast, but will not be able to run Excel.

All we can say is OMG.