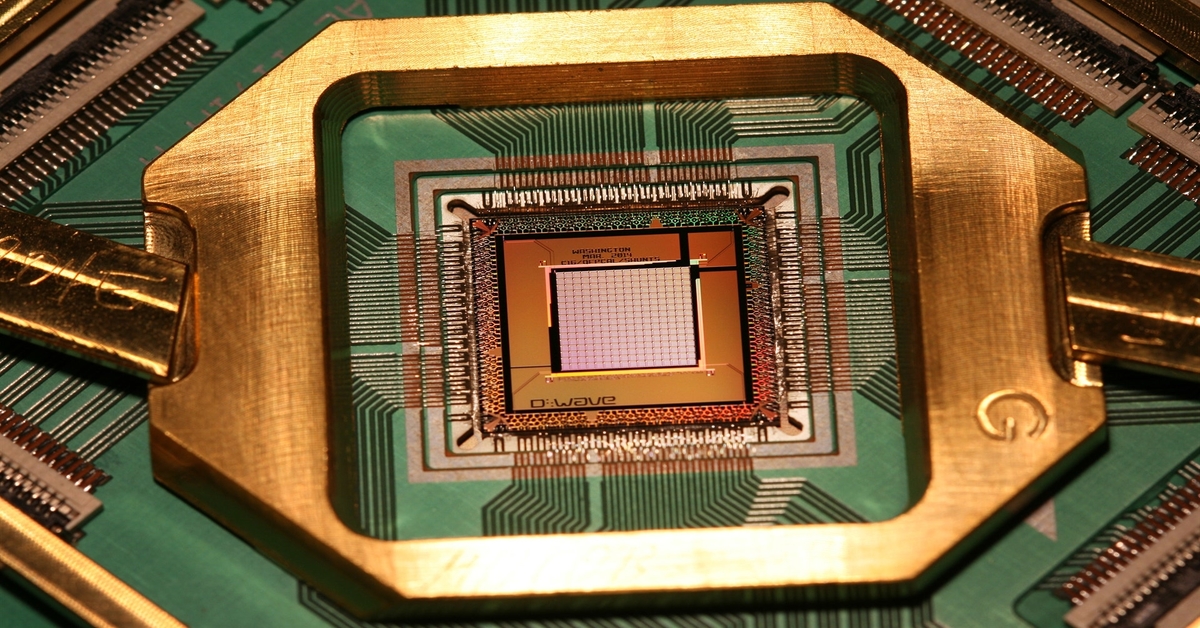

A practical quantum computer – one that operates on the laws of quantum physics – is still in its infancy. But it has the potential to revolutionise the world of computing.

Highly efficient batteries, precisely targeted medicines, more efficient package delivery and smarter artificial intelligence are just a few of the myriad outcomes practical quantum computers could generate.

But can quantum computers truly live up to their promise?

Challenging the quantum supremacy Google claimed with its Sycamore processor, a research duo from Beijing released a paper earlier this year, demonstrating a more efficient and accurate method to simulate quantum computing with traditional processors.

Now, there’s another challenger in the mix…

Classical vs quantum.

Rather than running a quantum algorithm on an advanced quantum processor, two physicists from the École Polytechnique Fédérale de Lausanne and Columbia University ran a classical machine learning algorithm on a conventional computer that closely reproduces the behaviour of near-term quantum computers.

Published in the journal npj Quantum Information, the study uses a neural network running on conventional computer hardware to simulate a quantum device executing the Quantum Approximate Optimisation Algorithm (QAOA).

It’s built on the idea that sophisticated machine learning tools can also be deployed to understand and reproduce the inner workings of a quantum computer.

QAOA? WHAT?

The dauntingly named QAOA is a quantum algorithm for solving optimisation problems on a hybrid setup consisting of a classical computer and a quantum co-processor. It is designed for problems where the best solution has to be picked out of an enormous number of possibilities, the kind of task where researchers hope for a quantum speedup.

Prototype quantum processors like Google’s Sycamore are restricted to performing noisy and limited operations. With a hybrid setup running QAOA, physicists hope to ameliorate some of the systematic limitations in accuracy and stability while still harnessing the potential of quantum behaviour.
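For readers who want to see the hybrid loop in action, here is a minimal sketch of a single QAOA layer on a toy problem: splitting a four-node ring into two groups so that as many edges as possible are cut. It assumes NumPy and a brute-force state vector, with a simple grid search standing in for the classical optimiser. The function names and the example graph are illustrative choices, and this is not the neural-network method used in the study.

```python
import numpy as np

def maxcut_costs(n_qubits, edges):
    """Cut size of every bitstring 0 .. 2**n - 1 (bit i = group of node i)."""
    states = np.arange(2 ** n_qubits)
    costs = np.zeros(2 ** n_qubits)
    for i, j in edges:
        # An edge counts as cut when its two end nodes sit in different groups.
        costs += ((states >> i) & 1) ^ ((states >> j) & 1)
    return costs

def apply_mixer(state, n_qubits, beta):
    """Apply exp(-i * beta * X) to every qubit of the state vector."""
    c, s = np.cos(beta), -1j * np.sin(beta)
    for q in range(n_qubits):
        psi = state.reshape(-1, 2, 2 ** q)  # middle axis indexes qubit q
        new = np.empty_like(psi)
        new[:, 0, :] = c * psi[:, 0, :] + s * psi[:, 1, :]
        new[:, 1, :] = s * psi[:, 0, :] + c * psi[:, 1, :]
        state = new.reshape(-1)
    return state

def qaoa_expectation(gammas, betas, n_qubits, costs):
    """Average cut size measured after alternating cost and mixer layers."""
    dim = 2 ** n_qubits
    state = np.full(dim, 1 / np.sqrt(dim), dtype=complex)  # uniform superposition
    for gamma, beta in zip(gammas, betas):
        state = np.exp(-1j * gamma * costs) * state  # cost layer (diagonal phases)
        state = apply_mixer(state, n_qubits, beta)   # mixer layer
    return float(np.sum(np.abs(state) ** 2 * costs))

# Toy instance (an assumption for illustration): a ring of four nodes.
n_qubits = 4
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
costs = maxcut_costs(n_qubits, edges)

# "Classical computer" half of the hybrid loop: try many angles, keep the best.
grid = np.linspace(0, np.pi, 25)
best_value, best_gamma, best_beta = max(
    (qaoa_expectation([g], [b], n_qubits, costs), g, b)
    for g in grid for b in grid
)
print(f"random guessing: {costs.mean():.2f} edges cut on average")
print(f"one QAOA layer:  {best_value:.2f} edges cut on average")
```

On real hardware the quantum co-processor would prepare and measure the state, and only the search over angles would run classically. Here both halves run on an ordinary computer, which is only feasible because the toy problem is tiny.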

In their study, the physicists successfully simulated 54 qubits with four QAOA layers, without the need for large-scale computational resources.
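For a sense of scale, the back-of-the-envelope calculation below estimates the memory a brute-force simulation would need if it stored every amplitude of a 54-qubit state. It assumes double-precision complex numbers at 16 bytes each, and the function name is purely illustrative. It is not how the study's neural-network approach works, which is precisely the point.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * bytes_per_amplitude

full = statevector_bytes(54)
print(f"{full / 2**50:.0f} PiB")  # 2**58 bytes = 256 PiB
```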

More importantly, the study addresses an important open question: whether algorithms running on current and near-term quantum computers can offer a significant advantage over classical algorithms for practical applications.

For now, there is still no definite answer. The researchers are also driven to understand the limits of simulating quantum systems with classical computing, which is itself evolving rapidly.

Eventually, the researchers hope that their approach will inspire new quantum algorithms that are not just useful, but also tough for classical computers to simulate – truly sparking the quantum era.