NVIDIA touts the A100 Tensor Core Graphics Processing Unit (GPU) as the world’s most advanced deep learning accelerator. It delivers the performance and flexibility you need to build intelligent machines that can see, hear, speak, and understand your world.

Powered by the latest NVIDIA Ampere architecture, the A100 delivers up to five times more training performance than previous-generation GPUs. Plus, it supports a wide range of artificial intelligence (AI) applications and frameworks, making it a great choice for any deep-learning deployment.

Battle Royale: GPU edition.

Recently, Intel and AMD have been spruiking upcoming products that take the compute-power fight to NVIDIA.

But how will they stack up?

A recent report by the Center for Efficient Exascale Discretizations (CEED), a research partnership between two U.S. Department of Energy laboratories and five universities, put NVIDIA’s A100 and AMD’s next-generation MI250X GPUs head-to-head. The findings provide food for thought for many in the realms of high-performance computing (HPC), AI and machine learning.

Primarily, they showed that the A100 GPUs are generally faster on real-world applications than the MI250X GPUs, despite the latter offering more than 2.5x the theoretical performance.

But why?

They concluded that NVIDIA’s advanced software stack and decade-long head start (in training developers, etc.) provide a powerful edge in allowing applications to effectively leverage the performance of the GPU.

Also, despite having more floating-point operations per second (FLOPS), the MI250X GPUs are still connected to the host system with the same bandwidth as the A100 GPUs. This bottleneck can starve the GPUs of data, limiting what you can achieve with them. With PCIe 5.0 on the horizon, offering double the bandwidth of PCIe 4.0, will we see the AMD GPUs take off? Time will tell…
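To see why extra FLOPS do not help when the link is the limit, here is a minimal back-of-the-envelope sketch. It assumes data transfer and compute overlap perfectly, so a step takes as long as the slower of the two. The function names and every number below are hypothetical and chosen only for illustration; they are not vendor specifications or figures from the CEED report.

```python
def step_time(bytes_moved, flops_needed, link_bandwidth, peak_flops):
    """Lower bound on the time for one step, in seconds.

    Assumes transfer and compute overlap perfectly, so the slower
    of the two sets the pace.
    """
    transfer = bytes_moved / link_bandwidth   # seconds spent feeding the GPU
    compute = flops_needed / peak_flops       # seconds spent calculating
    return max(transfer, compute)


def bottleneck(bytes_moved, flops_needed, link_bandwidth, peak_flops):
    """Name the resource that limits the step: 'transfer' or 'compute'."""
    transfer = bytes_moved / link_bandwidth
    compute = flops_needed / peak_flops
    return "transfer" if transfer > compute else "compute"


# Hypothetical workload: 64 GB moved and 10 TFLOP of work per step.
workload = dict(bytes_moved=64e9, flops_needed=10e12)

# Hypothetical GPU on a 32 GB/s link with 10 TFLOP/s of usable compute.
baseline = step_time(**workload, link_bandwidth=32e9, peak_flops=10e12)

# Doubling the compute leaves the step time unchanged: the link is the limit.
more_flops = step_time(**workload, link_bandwidth=32e9, peak_flops=20e12)

# Doubling the link bandwidth as well is what shortens the step.
faster_link = step_time(**workload, link_bandwidth=64e9, peak_flops=20e12)

print(baseline, more_flops, faster_link)
```

In this toy model the second GPU has twice the compute but finishes no sooner, because both spend the whole step waiting on the same link. Real applications overlap transfer and compute imperfectly and reuse data held on the GPU, so treat this as intuition rather than a prediction.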

Intel’s next-generation GPUs, built on the Xe architecture, are slowly coming to market. Its flagship product, the Ponte Vecchio GPU (used in Argonne National Laboratory’s Aurora exascale supercomputer), offers roughly 2.5–3.3x the performance of the MI250X and A100 (based on FP16, which is considered to be the optimal precision for AI workloads).

NVIDIA has also recently announced the H100 Tensor Core GPU, which promises 2.5–3x the performance of the A100, matching AMD’s MI250X and inching closer to Intel’s Ponte Vecchio. With NVIDIA’s mature software stack, will the H100 be the hardware to have?

For a neat comparison between NVIDIA’s upcoming H100, Intel’s upcoming Ponte Vecchio and AMD’s MI250X, check out the table at the end of this article.

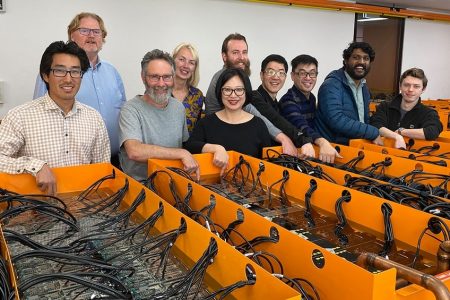

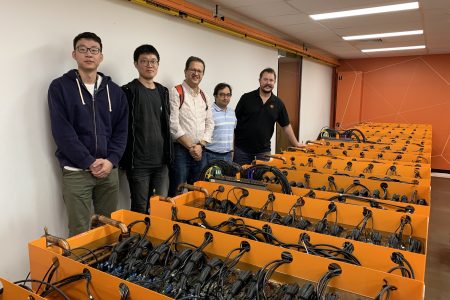

Power-up with DUG HPC.

We were a proud pre-release partner of NVIDIA’s A100 80GB PCIe GPU last year.

Now installed on and optimised for our HPC system, these GPUs provide the highest memory bandwidth and raw performance to deliver a step-change in our research and production GPU solutions. The GPUs’ robust tooling aligns perfectly with our agile, responsive approach to emerging client needs.

Come experience the power of the NVIDIA A100 80GB on our green HPC!

With a focus on data sovereignty and security, coupled with our dedicated HPC as a service (HPCaaS) support and domain-specific expertise, we strive to help you achieve higher throughput and faster results, accelerating the translation of your research and the commercialisation of your IP assets.

Drop us a line at [email protected] to have a chat with us.