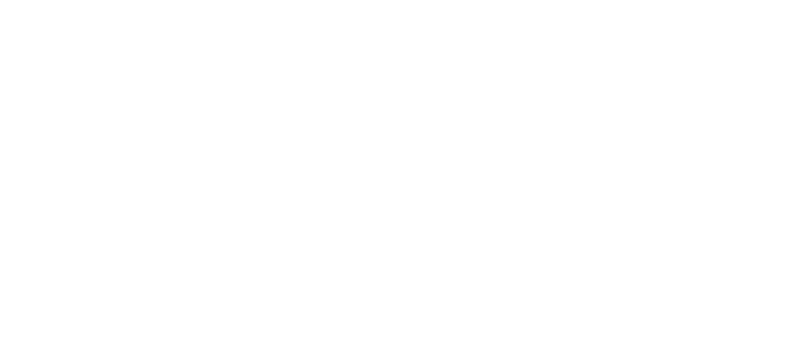

Earthquake prediction has long been the Holy Grail of seismology, the scientific study of earthquakes and related phenomena.

For a long time, scientists have poured effort into the elusive goal of predicting exactly when and where a major quake will strike, and how large it will be. So far, every attempt has come up short.

But that may soon cease to be true.

Slipping away.

Dr Paul Johnson, a geophysicist at Los Alamos National Laboratory in New Mexico, led a team that developed a tool that may make earthquake forecasting a pipe dream no more. Like so many scientific endeavours these days, the team’s approach relies on artificial intelligence (AI), specifically machine learning (ML). The study uses neural networks, which learn from experience in a way loosely akin to our nervous system: they are trained on example data that teaches them what to look out for. ML has seen great success recently, supercharging research and development in fields ranging from seismic processing to protein structure prediction and even beer brewing.

But in seismology, ML is still in its infancy. Compounding the difficulty is a shortage of quantitative data (quake magnitudes, shaking intensities and so on) for stick-slip earthquakes, the most common kind of quake.

Large earthquakes are caused by movement along geological faults at or near the boundaries between Earth’s tectonic plates, and this is where researchers look for data. But in stick-slip quakes, the build-up to the catastrophic slip takes a very long time, and the fault barely moves while strain accumulates. This starves an ML program of the data it needs to build an effective prediction model. To study earthquakes properly, researchers need to record them as they happen. And without a time machine to go back and capture past quakes, seismologists are stuck in a conundrum.

Dr Johnson turned instead to a different type of seismic activity: slow-slip quakes. These events are also caused by the movement of tectonic plates, but they unfold over hours, days or even weeks, rather than the seconds a stick-slip event lasts. That makes them a treasure trove for researchers. Their drawn-out progress yields a wealth of data points, which can better train a neural network to predict seismic activity.

The team’s ML system has already shown predictive capability in the Cascadia Subduction Zone in the Pacific Northwest. After listening to 12 years of the seismic "soundtrack" produced by the slowly moving fault, the system found patterns that let it hindcast (that is, reconstruct) past slow-slip events from the seismic signals that preceded them. In other words, had the system been running in real time, the team could have forecast those events about a week in advance.

On to the real world.

While Dr Johnson’s work showed that ML techniques do work on slow-slip events, extending such predictions to stick-slip earthquakes requires compensating for the lack of data. To fill this gap, the researchers generated miniature stick-slip earthquakes in the laboratory. The data they collected were used to fine-tune a numerical simulation of these lab quakes, and the simulated data were then combined with data from the real lab quakes.

The result? An ML model that can effectively predict when a lab quake will occur.

Next, Dr Johnson’s team intends to apply its forecasting approach to a real geological fault, possibly the San Andreas. Their ML system will be trained on data from a numerical simulation of the fault combined with data from actual quakes.

We’ll have to wait and see whether the model can hindcast seismic events that were not included in its training data. But if all goes well, seismologists may soon have tools accurate enough to anticipate earthquakes.

And such an advance would be nothing short of groundbreaking, pun intended.